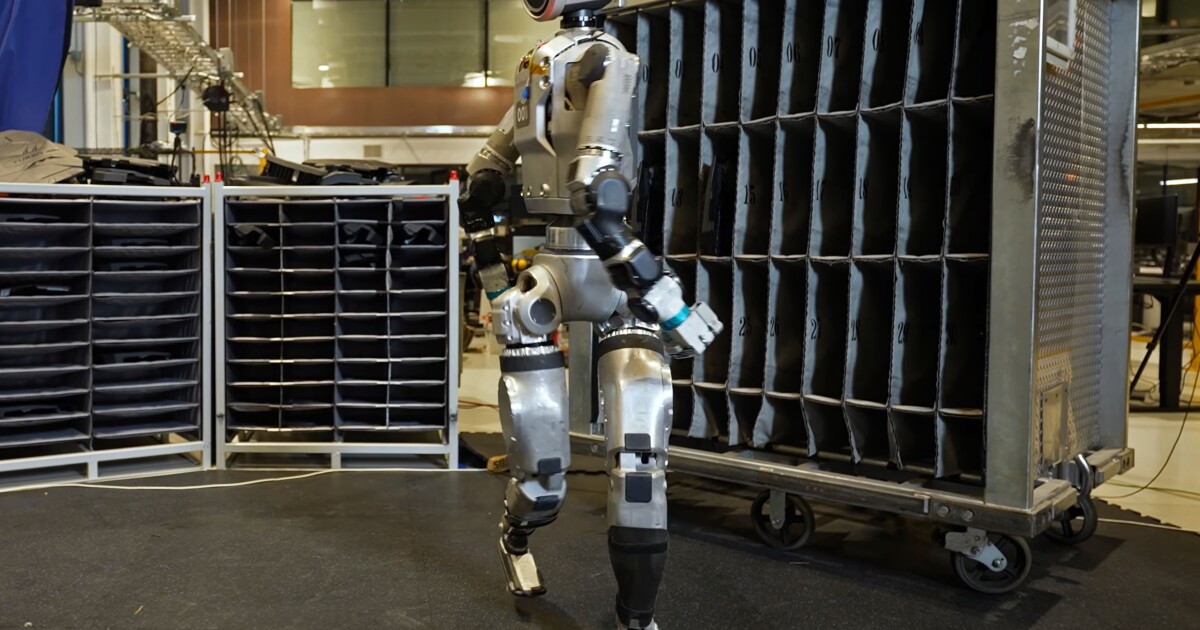

Watch: Atlas robot performs Exorcist head spins in pre-Halloween video

"Atlas uses a machine learning (ML) vision model to detect and localize the environment ... There are no prescribed or teleoperated movements; all motions are generated autonomously online. The robot is able to detect and react to changes in the environment (e.g., moving fixtures) and action failures (e.g., failure to insert the cover, tripping, environment collisions) using a combination of vision, force, and proprioceptive sensors."

Category: AI & Humanoids, Technology

Tags: Boston Dynamics, Robot, Machine Learning, Artificial Intelligence

http://dlvr.it/TFvQPl